Requirement

The FSQ team approached us for a greenfield implementation of a self-managed Kubernetes cluster, with complete automation for 25+ microservices. The ask was for a scalable setup able to serve a million users at full scale, and a highly available setup to ensure uptime.

Problem Statement

The customer also wanted to be able to scale up to a million users within a few minutes. They wanted a reliable cloud and a way to scale nodes up and down with demand, without manual intervention. The customer was also concerned about securing their servers according to best practices, and wanted non-compliance to be monitored automatically. Finally, they did not want regular maintenance patches to affect application performance.

Proposed Solution & Architecture

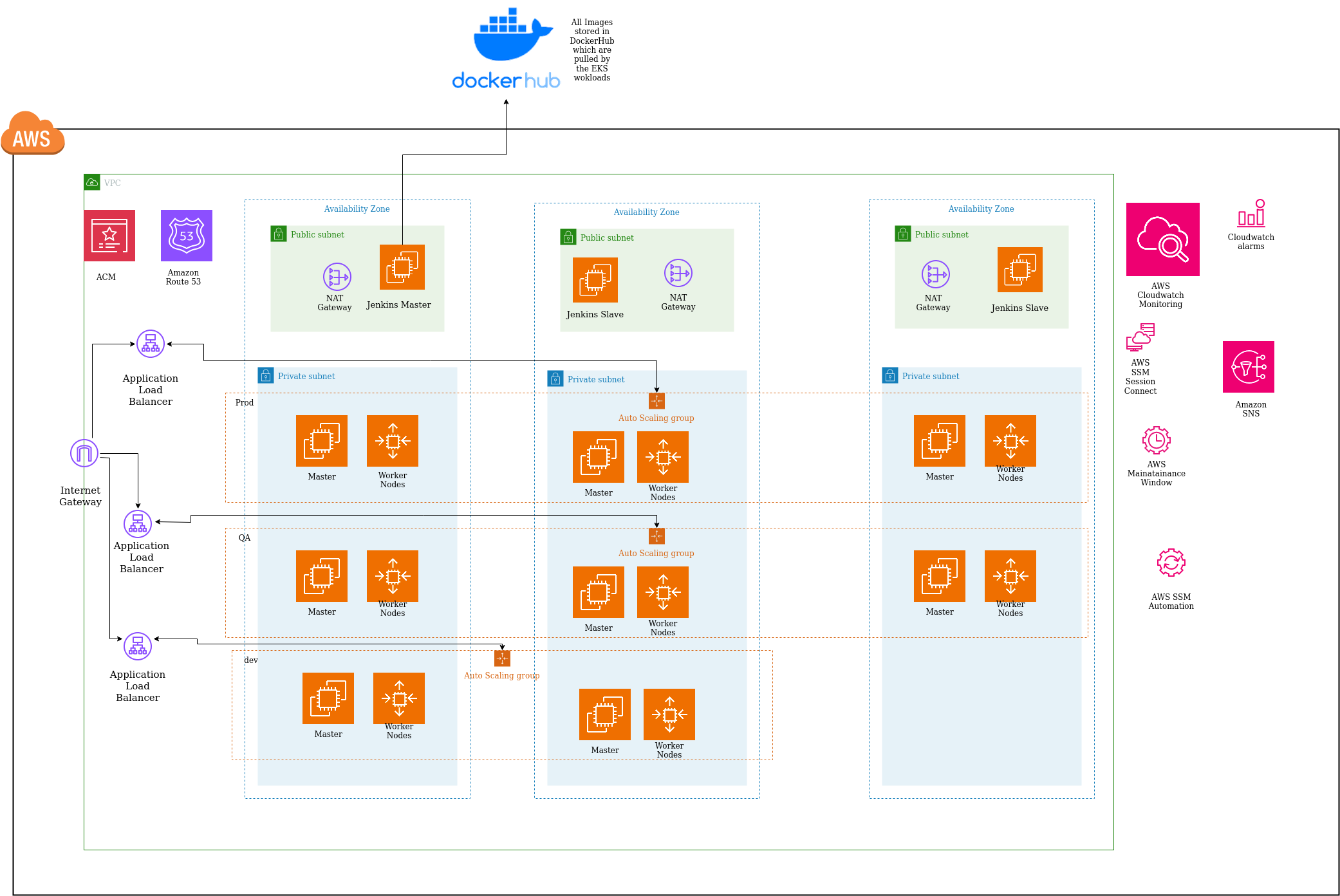

As a result of this approach, some of the EC2 instances in the Auto Scaling Groups were short-lived. Our expert team worked on this requirement and executed multiple sub-projects, which included:

- Design and build of the cloud infrastructure (VPC, a 3-node Kubernetes control plane and multiple worker nodes on EC2 running Ubuntu) using Terraform, Ansible and Packer scripts

- Kubernetes clusters created with the Calico CNI and the containerd container runtime on the worker nodes

- Kubernetes Cluster Autoscaler configured on AWS with Auto Scaling Groups, Launch Configurations and AWS SSM, for worker-node scalability from standard builds

- Setup of 5 environments: Dev, QA, Staging, Load-Test and Prod

- Setup of HashiCorp Vault, Consul, ScyllaDB, MinIO, Redis and MySQL StatefulSet (STS) clusters with IaC

- Deployment of a highly available NGINX Ingress for the endpoints

- 25+ microservices deployed with Jenkins (master and agent nodes)

- CI/CD pipelines integrated with Docker Hub and GitHub

- A MongoDB instance for each microservice

- Setup and trials of the Kong API Gateway

- Prometheus, Grafana and EFK for traceability and observability, along with CloudWatch, CloudTrail, etc.

For worker-node autoscaling, we proposed using AWS SSM Run Command in the startup script of each node being built. The script sends the "AWS-RunShellScript" document to the control plane, which generates a token so the new node can join the Kubernetes cluster.

To provide a safe way to connect to the servers, we suggested AWS SSM Session Manager, which gives console and CLI access without exposing open ports and logs every session.

Scheduled maintenance patching was controlled through AWS Systems Manager Maintenance Windows, which made patching easy to manage.
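The node-join flow described above (a new worker uses SSM Run Command with the "AWS-RunShellScript" document so the control plane generates a join token) can be sketched in Python as follows. This is a minimal sketch, not the team's actual startup script. It assumes the caller passes a boto3 SSM client (or any object with the same `send_command` / `get_command_invocation` methods), assumes the control-plane instance ID is already known, and uses `kubeadm token create --print-join-command` as the token-generation step, which the original does not name. The helper names `fetch_join_command` and `join_argv` are hypothetical.

```python
import shlex
import time

# Assumption: kubeadm is installed on the control-plane node. The original only
# says a token is generated; this specific command is our illustration.
JOIN_SCRIPT = ["kubeadm token create --print-join-command"]

# SSM invocation statuses after which no join command will be produced.
FAILED_STATUSES = {"Cancelled", "Cancelling", "TimedOut", "Failed"}


def fetch_join_command(ssm, control_plane_id, poll_seconds=2.0, max_polls=30):
    """Ask the control plane, via SSM Run Command, for a `kubeadm join ...` line.

    `ssm` is a boto3 SSM client or any object exposing the same
    send_command / get_command_invocation methods (hypothetical helper).
    """
    resp = ssm.send_command(
        InstanceIds=[control_plane_id],
        DocumentName="AWS-RunShellScript",
        Parameters={"commands": JOIN_SCRIPT},
        Comment="Generate kubeadm join command for a new worker",
    )
    command_id = resp["Command"]["CommandId"]

    for _ in range(max_polls):
        # Wait before each poll. With real boto3, a very early poll can raise
        # InvocationDoesNotExist; production code would catch that and retry.
        time.sleep(poll_seconds)
        inv = ssm.get_command_invocation(
            CommandId=command_id, InstanceId=control_plane_id
        )
        status = inv["Status"]
        if status == "Success":
            lines = [ln.strip() for ln in inv["StandardOutputContent"].splitlines()]
            lines = [ln for ln in lines if ln]
            if not lines:
                raise RuntimeError("control plane returned no join command")
            # The join command is the last non-empty line of stdout.
            return lines[-1]
        if status in FAILED_STATUSES:
            raise RuntimeError(f"SSM command {command_id} ended with status {status}")
        # Pending / InProgress / Delayed: keep polling.
    raise TimeoutError(f"SSM command {command_id} did not finish in time")


def join_argv(join_command):
    """Split the join line into argv, refusing anything that is not `kubeadm join`."""
    argv = shlex.split(join_command)
    if argv[:2] != ["kubeadm", "join"]:
        raise ValueError(f"unexpected join command: {join_command!r}")
    return argv
```

On the worker, the startup script could then run `subprocess.run(join_argv(cmd), check=True)`. The worker's instance role would need the `ssm:SendCommand` and `ssm:GetCommandInvocation` permissions, and these are worth scoping tightly because "AWS-RunShellScript" runs as root on the target instance.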

Outcome & Success Metrics

- Easy scale-up of Kubernetes nodes, without manual intervention, to cater to millions of users.

- Standardised processes and configuration for secured access.

- Automated patching and quick recoverability within the maintenance window.
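The automated patching relied on AWS Systems Manager Maintenance Windows. As a hedged sketch, the request payloads for such a window might look like the following. These are plain dictionaries meant to be passed as keyword arguments to boto3's `create_maintenance_window` and `register_task_with_maintenance_window`. The helper names, the weekly Sunday 02:00 (UTC) schedule, the 3-hour duration with a 1-hour cutoff, and the placeholder window and target IDs are all illustrative assumptions. Draining each Kubernetes worker before it is patched is also an assumed practice for keeping patches from affecting application performance; the original does not say the team drained nodes.

```python
# Sketch: request payloads for patching Kubernetes workers in an SSM Maintenance
# Window. Helper names and example values are illustrative assumptions.


def maintenance_window_request(name, schedule, duration_hours=3, cutoff_hours=1):
    """Keyword arguments for boto3 `ssm.create_maintenance_window`."""
    if not 1 <= duration_hours <= 24:
        raise ValueError("Duration must be between 1 and 24 hours")
    # Cutoff = hours before the window ends when no new tasks are started.
    if not 0 <= cutoff_hours < duration_hours:
        raise ValueError("Cutoff must be non-negative and shorter than Duration")
    return {
        "Name": name,
        "Schedule": schedule,  # e.g. "cron(0 2 ? * SUN *)"
        "Duration": duration_hours,
        "Cutoff": cutoff_hours,
        "AllowUnassociatedTargets": False,
    }


def patch_task_request(window_id, window_target_id):
    """Keyword arguments for boto3 `ssm.register_task_with_maintenance_window`.

    Runs AWS-RunPatchBaseline on one target at a time (MaxConcurrency "1")
    and stops sending the task after the first error (MaxErrors "0").
    """
    return {
        "WindowId": window_id,
        "Targets": [{"Key": "WindowTargetIds", "Values": [window_target_id]}],
        "TaskArn": "AWS-RunPatchBaseline",
        "TaskType": "RUN_COMMAND",
        "TaskInvocationParameters": {
            "RunCommand": {"Parameters": {"Operation": ["Install"]}}
        },
        "Priority": 1,
        "MaxConcurrency": "1",
        "MaxErrors": "0",
    }


def drain_command(node_name):
    """kubectl argv that cordons a node and evicts its pods before patching."""
    return ["kubectl", "drain", node_name, "--ignore-daemonsets",
            "--delete-emptydir-data", "--timeout=10m"]


def uncordon_command(node_name):
    """kubectl argv that makes the node schedulable again after patching."""
    return ["kubectl", "uncordon", node_name]
```

Setting `MaxConcurrency` to "1" means only one worker's capacity is out of service at a time, which is the main lever for keeping patching invisible to users. The drain and uncordon commands would run around each node's patch, for example from an admin host with cluster credentials. `--delete-emptydir-data` assumes kubectl 1.20 or later.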