Introduction:

In today's data-driven world, High-Performance Computing (HPC) has become a cornerstone for solving complex problems and accelerating scientific research, engineering simulations, and more. Amazon Web Services (AWS) offers a robust platform for HPC workloads, providing an array of services to power your research and innovation. Let's dive into the fascinating realm of General HPC Architecture on AWS and discover the possibilities it holds! 🚀

The Building Blocks of HPC on AWS

EC2 Instances: 💡

Amazon Elastic Compute Cloud (EC2) provides the computational backbone for your HPC workloads. With a vast array of instance types, you can choose the configuration that fits your computational needs. Instance families include Compute Optimized, Memory Optimized, Storage Optimized, Accelerated Computing (GPU-based, for massively parallel processing), and HPC Optimized. #EC2 #HPC
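EC2 instance type names encode their family. Each name has a prefix such as `c` or `hpc`, a generation number, optional attribute letters, and a size after the dot (for example `c7g.16xlarge`). The hypothetical helper below is a minimal sketch that maps common prefixes to the categories listed above. The prefix table is an illustrative subset, not the full or authoritative list, so check the EC2 documentation for current families.

```python
import re

# Illustrative subset of EC2 family prefixes -> instance category (not exhaustive).
FAMILY_CATEGORIES = {
    "m": "General purpose",
    "t": "General purpose",
    "c": "Compute optimized",
    "r": "Memory optimized",
    "x": "Memory optimized",
    "i": "Storage optimized",
    "d": "Storage optimized",
    "p": "Accelerated computing",
    "g": "Accelerated computing",
    "trn": "Accelerated computing",
    "inf": "Accelerated computing",
    "hpc": "HPC optimized",
}

def instance_category(instance_type: str) -> str:
    """Return the category for an instance type such as 'c7g.16xlarge' (hypothetical helper)."""
    family = instance_type.lower().split(".", 1)[0]   # e.g. 'c7g'
    match = re.match(r"[a-z]+", family)                # leading letters, e.g. 'c' or 'hpc'
    if match is None:
        return "Unknown"
    return FAMILY_CATEGORIES.get(match.group(0), "Unknown")
```

For example, `instance_category("hpc7g.16xlarge")` returns `"HPC optimized"`, and any prefix missing from the table falls back to `"Unknown"`.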

Elastic Fabric Adapter (EFA): 🚀

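EFA is a network interface for EC2 instances that plays a role similar to InfiniBand or RoCE interconnects in on-premises clusters. It's the secret sauce for tightly coupled HPC workloads. By letting MPI and other communication libraries bypass the operating system kernel, it lowers latency and increases throughput for traffic between nodes, so your simulations and computations run faster. #EFA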
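The function below is a rough sketch of what requesting EFA looks like. It builds keyword arguments for boto3's EC2 `run_instances` call, with an EFA network interface and a cluster placement group. The function name and all IDs are hypothetical placeholders. A real launch also needs an EFA-capable instance type, an existing placement group created with the `cluster` strategy, and an AMI with the EFA software (drivers and libfabric) installed.

```python
def efa_launch_params(ami_id, instance_type, subnet_id, security_group_id,
                      placement_group, count):
    """Build keyword arguments for boto3's EC2 run_instances with an EFA attached (sketch)."""
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,   # must be an EFA-capable type
        "MinCount": count,               # launch all nodes or none
        "MaxCount": count,
        # A cluster placement group keeps the nodes physically close for low latency.
        "Placement": {"GroupName": placement_group},
        # Subnet and security groups go on the interface when NetworkInterfaces is used.
        "NetworkInterfaces": [{
            "DeviceIndex": 0,
            "SubnetId": subnet_id,
            "Groups": [security_group_id],
            "InterfaceType": "efa",      # request an EFA instead of a standard interface
        }],
    }
```

You could pass the result to `boto3.client("ec2").run_instances(**params)`. To find EFA-capable types, `describe_instance_types` accepts the filter `network-info.efa-supported` with the value `true`.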

Amazon FSx for Lustre: 📚

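This fully managed Lustre file system provides high-performance, parallel shared storage for your HPC applications. It can also be linked to Amazon S3 so your input data is ready to use. It's like having a massive library right at your fingertips. #FSx #HPC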
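The sketch below builds keyword arguments for boto3's FSx `create_file_system` call. It creates a scratch Lustre file system, optionally linked to an S3 prefix. It assumes the `SCRATCH_2` deployment type with SSD storage and checks that type's capacity rule: 1200 GiB, 2400 GiB, or a multiple of 2400 GiB. The helper name, subnet ID, and bucket path are hypothetical.

```python
def lustre_scratch_params(subnet_id, storage_gib, import_path=None):
    """Build keyword arguments for boto3's FSx create_file_system (SCRATCH_2 Lustre sketch)."""
    # SCRATCH_2 SSD capacity: 1200 GiB, or a positive multiple of 2400 GiB.
    if storage_gib != 1200 and (storage_gib <= 0 or storage_gib % 2400 != 0):
        raise ValueError(f"invalid SCRATCH_2 capacity: {storage_gib} GiB")
    lustre = {"DeploymentType": "SCRATCH_2"}
    if import_path:
        # Link the file system to an S3 prefix such as 's3://my-bucket/input'.
        lustre["ImportPath"] = import_path
    return {
        "FileSystemType": "LUSTRE",
        "StorageCapacity": storage_gib,
        "SubnetIds": [subnet_id],
        "LustreConfiguration": lustre,
    }
```

Scratch file systems suit temporary job data. For longer-lived data, FSx for Lustre also offers persistent deployment types.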

AWS ParallelCluster: 🔄

This open-source HPC cluster management tool simplifies the setup, scaling, and management of HPC clusters. It's like having an orchestra conductor for your computational symphony. #ParallelCluster
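ParallelCluster describes a cluster in a YAML configuration file. The sketch below builds a minimal configuration in the ParallelCluster 3 style as a Python dict. It defines one Slurm queue with EFA that scales from zero, plus an FSx for Lustre mount. The key names are based on the ParallelCluster 3 format. Every value (region, subnet, key pair, instance types, OS) is a hypothetical placeholder, so validate against the configuration reference for your ParallelCluster version.

```python
def cluster_config(region, subnet_id, key_name, compute_type, max_nodes,
                   head_type="c5.xlarge"):
    """Return a minimal ParallelCluster 3-style configuration as a dict (sketch)."""
    return {
        "Region": region,
        "Image": {"Os": "alinux2023"},  # supported OS values vary by ParallelCluster version
        "HeadNode": {
            "InstanceType": head_type,
            "Networking": {"SubnetId": subnet_id},
            "Ssh": {"KeyName": key_name},
        },
        "Scheduling": {
            "Scheduler": "slurm",
            "SlurmQueues": [{
                "Name": "compute",
                "ComputeResources": [{
                    "Name": "nodes",
                    "InstanceType": compute_type,  # should be EFA-capable
                    "MinCount": 0,                 # no idle nodes; launch on demand
                    "MaxCount": max_nodes,
                    "Efa": {"Enabled": True},
                }],
                "Networking": {
                    "SubnetIds": [subnet_id],
                    "PlacementGroup": {"Enabled": True},
                },
            }],
        },
        "SharedStorage": [{
            "MountDir": "/fsx",
            "Name": "fsx",
            "StorageType": "FsxLustre",
            "FsxLustreSettings": {"StorageCapacity": 1200, "DeploymentType": "SCRATCH_2"},
        }],
    }
```

One way to use it is to write the dict out with PyYAML (`yaml.safe_dump(cfg, sort_keys=False)`) to `cluster-config.yaml`. Then run `pcluster create-cluster --cluster-name hpc-demo --cluster-configuration cluster-config.yaml`.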

Accelerating Your Research

AWS Batch: ⚙️

Need to run batch computing jobs? AWS Batch is your go-to solution for dynamic scaling, job scheduling, and resource management. It's like having a personal assistant for managing your computational tasks. #AWSBatch
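AWS Batch array jobs are a common way to fan out many similar tasks, such as a parameter sweep. This sketch builds keyword arguments for boto3's Batch `submit_job`. It also shows how each child job can use the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable, which Batch sets for array children, to pick its own input. The `INPUT_LIST` variable, the helper names, and the queue and job definition names are hypothetical.

```python
import os

def array_job_params(job_name, job_queue, job_definition, inputs):
    """Build keyword arguments for boto3's Batch submit_job as an array job (sketch)."""
    if not 2 <= len(inputs) <= 10_000:
        raise ValueError("an array job needs between 2 and 10,000 children")
    return {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        # One child job per input; Batch runs them in parallel as capacity allows.
        "arrayProperties": {"size": len(inputs)},
        "containerOverrides": {
            # Hypothetical convention: pass the inputs as one comma-separated variable.
            "environment": [{"name": "INPUT_LIST", "value": ",".join(inputs)}],
        },
    }

def input_for_this_child(env=os.environ):
    """Inside a child job: return the input that matches this child's array index."""
    index = int(env["AWS_BATCH_JOB_ARRAY_INDEX"])  # set by AWS Batch for array children
    return env["INPUT_LIST"].split(",")[index]
```

Comma-joining assumes the input names contain no commas. For long lists, a manifest file in S3 is a sturdier choice than an environment variable.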

Amazon SageMaker: 🧠

For machine learning and AI workloads, SageMaker provides a comprehensive environment for training and deploying models at scale. It's like having your own AI laboratory. #SageMaker #AI
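To show what "at scale" looks like in practice, the sketch below builds keyword arguments for boto3's SageMaker `create_training_job` and requests two training instances. The image URI, role ARN, S3 paths, instance type, and volume size are hypothetical placeholders. Using more than one instance only helps if the training code itself supports distributed training.

```python
def training_job_params(job_name, image_uri, role_arn, train_s3, output_s3,
                        instance_type="ml.p4d.24xlarge", instance_count=2):
    """Build keyword arguments for boto3's SageMaker create_training_job (sketch)."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3,
                "S3DataDistributionType": "FullyReplicated",  # every instance gets all data
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3},
        # SageMaker provisions these instances for the job and releases them afterwards.
        "ResourceConfig": {"InstanceType": instance_type,
                           "InstanceCount": instance_count,
                           "VolumeSizeInGB": 100},
        "StoppingCondition": {"MaxRuntimeInSeconds": 24 * 3600},  # cap at one day
    }
```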

AWS Lambda: 🌐

Lambda lets you run code without provisioning or managing servers. Use it for automating tasks and responding to events, allowing you to focus on your research. It's like having a digital butler for repetitive tasks. #Lambda
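A typical research automation is reacting when results land in S3. This minimal sketch is a Python Lambda handler for S3 `ObjectCreated` event notifications that collects the URIs of the new objects. What the function does with them afterwards is left as an assumption, for example starting post-processing or sending a notification.

```python
from urllib.parse import unquote_plus

def lambda_handler(event, context):
    """Triggered by S3 ObjectCreated notifications, e.g. when a job uploads results."""
    new_results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        new_results.append(f"s3://{bucket}/{key}")
    # A real function might start post-processing or send a notification here.
    return {"new_results": new_results}
```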

The Power of Scalability

Microsoft HPC Pack on AWS: 📈

Scaling Windows-centric HPC workloads is a breeze with Microsoft HPC Pack. This cluster manager and job scheduler can be deployed on AWS to automate the deployment and configuration of HPC clusters, making it as easy as playing with building blocks. #HPCPack

AWS Auto Scaling: 🚗

With Auto Scaling, you can automatically adjust the number of EC2 instances to match your workload. It's like having a self-driving car for your computing resources. #AutoScaling
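As an illustration, this sketch builds keyword arguments for boto3's Auto Scaling `put_scaling_policy` call. It attaches a target tracking policy that keeps an Auto Scaling group's average CPU near a chosen value. The group name and the 70% default target are hypothetical.

```python
def target_tracking_policy(asg_name, target_cpu_percent=70.0):
    """Build keyword arguments for boto3's Auto Scaling put_scaling_policy (sketch)."""
    if not 0 < target_cpu_percent <= 100:
        raise ValueError("target must be a CPU percentage in (0, 100]")
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-cpu-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            # Add or remove instances to keep average CPU near the target value.
            "PredefinedMetricSpecification": {"PredefinedMetricType": "ASGAverageCPUUtilization"},
            "TargetValue": target_cpu_percent,
        },
    }
```

For batch-style HPC jobs, scaling on queue depth (as HPC schedulers do) is often a better signal than CPU utilization.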

Elastic Load Balancing: 🎯

Distribute incoming traffic across multiple targets to ensure high availability and fault tolerance for the user-facing services around your HPC applications, such as web portals. It's like having a star quarterback on your team. #LoadBalancing

Security and Compliance

AWS Identity and Access Management (IAM): 🔒

Protect your HPC resources with AWS IAM, which allows you to control who can access your resources and what actions they can perform. It's like having a digital bouncer at the door. #IAM #Security
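Least privilege is the usual goal with IAM. The sketch below builds a hypothetical policy document that lets a role read and write only under one prefix of a results bucket. The bucket and prefix names are placeholders.

```python
def results_bucket_policy(bucket, prefix):
    """Least-privilege IAM policy: read and write only under one S3 prefix (sketch)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
            {
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Allow listing only within the same prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
        ],
    }
```

To create it, you could serialize the dict with `json.dumps` and pass it as `PolicyDocument` to boto3's IAM `create_policy`.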

Amazon VPC: 🌐

Amazon Virtual Private Cloud gives your HPC workloads a logically isolated network, with subnets and security groups that control which traffic can reach your instances. It's like working in a highly guarded laboratory. #VPC #Security
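Security groups are the VPC feature that HPC clusters rely on most. EFA requires a security group that allows all inbound and outbound traffic to and from the security group itself. This lets nodes talk to each other freely while staying closed to everything else. This sketch builds the `IpPermissions` list for that self-referencing rule. The group ID is a hypothetical placeholder.

```python
def efa_self_referencing_rules(security_group_id):
    """IpPermissions allowing all traffic between members of one security group (sketch)."""
    return [{
        "IpProtocol": "-1",  # all protocols and ports
        # The source/destination is the security group itself.
        "UserIdGroupPairs": [{"GroupId": security_group_id}],
    }]
```

Pass the list to both `authorize_security_group_ingress` and `authorize_security_group_egress` on a boto3 EC2 client, with `GroupId` set to the same group.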

AWS Config: 📜

Maintain compliance with AWS Config by continuously monitoring and recording your AWS resource configurations. It's like having an auditor on your team. #Config #Compliance
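AWS Config evaluates resources against rules. The sketch builds keyword arguments for boto3's Config `put_config_rule` using the AWS managed rule `S3_BUCKET_PUBLIC_READ_PROHIBITED`, for example to keep result buckets from becoming publicly readable. The rule name is hypothetical, and the sketch assumes a configuration recorder is already running in the account.

```python
def managed_rule_params(rule_name, source_identifier, resource_types):
    """Build keyword arguments for boto3's Config put_config_rule with an AWS managed rule."""
    return {
        "ConfigRule": {
            "ConfigRuleName": rule_name,
            # Owner 'AWS' selects a managed rule by its identifier.
            "Source": {"Owner": "AWS", "SourceIdentifier": source_identifier},
            "Scope": {"ComplianceResourceTypes": resource_types},
        }
    }

params = managed_rule_params(
    "results-buckets-no-public-read",
    "S3_BUCKET_PUBLIC_READ_PROHIBITED",
    ["AWS::S3::Bucket"],
)
```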

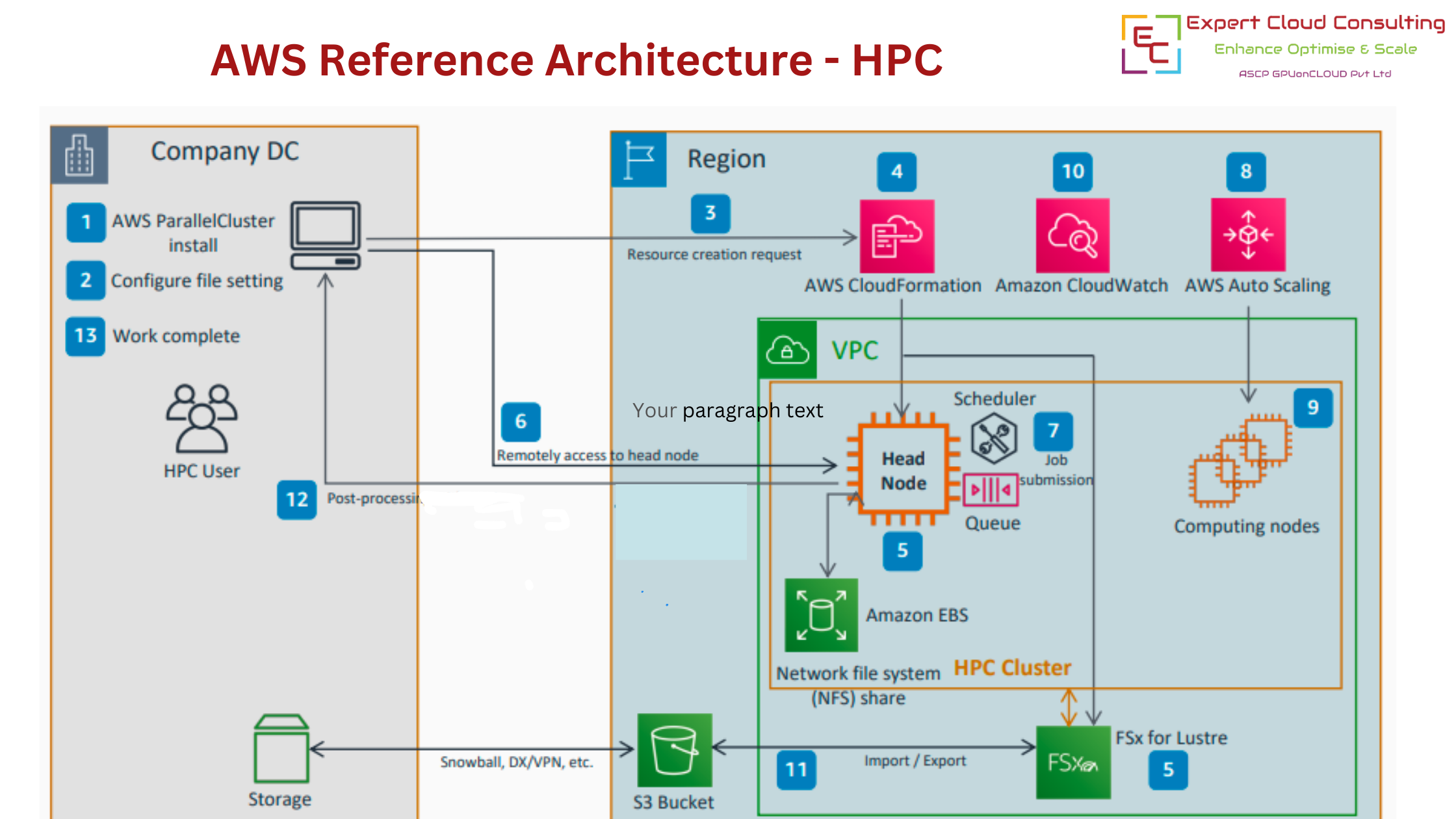

Here are the steps of the AWS reference architecture, for quick reference:

- Install AWS ParallelCluster, which is used to provision HPC resources.

- Use AWS ParallelCluster to define the resources you want to provision in a cluster configuration file.

- Create the cluster from that configuration file with the AWS ParallelCluster `pcluster create-cluster` command.

- Behind the scenes, AWS ParallelCluster uses AWS CloudFormation, an infrastructure as code (IaC) service, to provision the actual resources.

- When provisioning is complete, the defined resources exist: a head node (running the configured scheduler) and a shared file system (Amazon FSx for Lustre).

- To run the simulation, connect to the head node over SSH or through a DCV remote desktop session.

- Create a job script on the head node and submit it to the scheduler installed there. The job waits in the queue until resources are available.

- The scheduler allocates the compute resources requested in the job script.

- Compute nodes are launched to run the queued job, and the computation is performed.

- The cluster nodes and other HPC resources are monitored with Amazon CloudWatch.

- The results can be stored in Amazon Simple Storage Service (Amazon S3) and transferred to the on-premises environment if needed.

- If needed, you can also post-process the results with DCV in the cloud, without transferring the result data on premises.

- When no jobs remain, the compute cluster is torn down and its nodes are terminated.
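The job-script steps above can be sketched as follows, assuming the head node runs Slurm (one of the schedulers ParallelCluster supports). The hypothetical helper renders a minimal batch script. The job name, node counts, and solver command are placeholders.

```python
def slurm_job_script(job_name, nodes, tasks_per_node, command, output="%x-%j.out"):
    """Render a minimal Slurm batch script for the head node's scheduler (sketch)."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --output={output}",  # %x = job name, %j = job ID
        "",
        # srun starts the tasks across all nodes in the allocation.
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"
```

On the head node you would save the output as `job.sh`, submit it with `sbatch job.sh`, and watch it in the queue with `squeue`. Inside the allocation, `srun` starts nodes times tasks_per_node tasks.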

Conclusion

General HPC Architecture on AWS opens up a world of possibilities for researchers, scientists, engineers, and innovators. With its scalable and flexible infrastructure, you can explore new frontiers in science and technology, all while ensuring security and compliance. So, unleash the power of AWS for your HPC workloads and watch your research take flight! 🌟 #AWSHPC #Innovation #Science #HPCOnAWS #AWS #HPC #CloudComputing #Technology #HPCInnovation #AWSInnovation #ScientificComputing #ResearchComputing #MediaRendering #HPCCommunity #CuttingEdgeComputing #GPUOptimizedInstances #AWSCompute #HPCApplications #AWSUpdates #HPCSolutions